The Riak key-value database: I like it

(Note: This is a writeup I did a few years ago when evaluating Riak KV as a possible data store for a high-traffic CMS. At the time, the product was called simply “Riak”. Naturally, other details may be out of date as well.)

Riak is a distributed key/value store written in Erlang. It is open source and backed by a commercial company, Basho.

Its design is based on Dynamo, a storage system built at Amazon and described in a paper the company published in 2007. The engineers at Basho used this paper to guide the design of Riak.

Its scalability and fault-tolerance derive from the fact that all Riak nodes are full peers – there are no “primary” or “replica” nodes. If a node goes down, its data is already on other nodes, and the distributed hashing system will take care of populating any fresh node added to the cluster (whether it is replacing a dead one or being added to improve capacity).

In terms of Brewer’s “CAP theorem,” Riak sacrifices immediate consistency in favor of the two other factors: availability and robustness in the face of network partition (i.e. nodes being unable to reach one another). Riak promises “eventual consistency” across all nodes for data writes. Its “vector clocks” feature stores metadata that tracks modifications to values, to help deal with transient situations where different nodes have different values for a particular key.
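To make the vector clock idea concrete, here is a minimal Python sketch. It is not Riak's actual implementation: it assumes a clock can be modeled as a dict mapping an actor ID to a counter, and the function names (increment, descends, in_conflict) are hypothetical.

```python
def increment(clock, actor):
    """Return a copy of the clock with this actor's counter bumped by one."""
    updated = dict(clock)
    updated[actor] = updated.get(actor, 0) + 1
    return updated


def descends(a, b):
    """True if clock a has seen every update recorded in clock b."""
    return all(a.get(actor, 0) >= count for actor, count in b.items())


def in_conflict(a, b):
    """True if neither clock descends from the other (concurrent writes)."""
    return not descends(a, b) and not descends(b, a)


base = increment({}, "node_a")      # first write, coordinated by node_a
left = increment(base, "node_b")    # later write via node_b
right = increment(base, "node_c")   # concurrent write via node_c
```

Here left and right both descend from base, but neither descends from the other, so a store in this situation has to keep both values until something resolves the conflict.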

Riak’s “Active Anti-Entropy” feature repairs corrupted data in the background (originally this was only done during reads, or via a manual repair command).

Queries that need to do more than simple key/value mapping can use Riak’s MapReduce implementation. Query functions can be written in Erlang or JavaScript (running on SpiderMonkey). The “map” step execution is distributed, running on nodes holding the needed data – maximizing parallelism and minimizing data transfer overhead. The “reduce” step is executed on a single node, the one where the job was invoked.
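The split between a distributed map step and a single-node reduce step can be sketched in plain Python. This is only a simulation of the data flow, not Riak's MapReduce API; the node names, keys, and values are made up for illustration.

```python
from collections import Counter

# Hypothetical cluster: each node holds a slice of the keys.
nodes = {
    "node_a": {"story-1": "sports", "story-2": "news"},
    "node_b": {"story-3": "news"},
    "node_c": {"story-4": "sports", "story-5": "news"},
}


def map_phase(local_data):
    """Runs where the data lives; emits one (section, 1) pair per value."""
    return [(section, 1) for section in local_data.values()]


def reduce_phase(pairs):
    """Runs once, on the coordinating node; sums the counts per section."""
    totals = Counter()
    for section, count in pairs:
        totals[section] += count
    return dict(totals)


# Each node maps over its own data; only the small intermediate pairs travel.
intermediate = [pair for data in nodes.values() for pair in map_phase(data)]
result = reduce_phase(intermediate)
```

The point of the arrangement is that the full stored values never leave their nodes; only the intermediate pairs are shipped to the node that runs the reduce.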

There is also a “Riak Search” engine that can be run on top of the basic Riak key/value store, providing full-text searching (with the option of a Solr-like interface) while being simpler to use than MapReduce.

Technical details

Riak groups keys in namespaces called “buckets” (which are logical, rather than being tied to particular storage locations).

Riak has a first-class HTTP/REST API. It also has officially supported client libraries for Python, Java, Erlang, Ruby, and PHP, and unofficial libraries for C/C++ and JavaScript. There is also a Protocol Buffers API.
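As a rough illustration of what talking to the HTTP API looks like, here is a sketch that builds (but does not send) a PUT request using only the Python standard library. It assumes a node listening on localhost at port 8098 and the /buckets/.../keys/... URL layout; the bucket name, key, and helper names are hypothetical, and older Riak versions used a different path scheme.

```python
import json
from urllib.parse import quote
from urllib.request import Request

RIAK_URL = "http://localhost:8098"  # assumption: local node, default HTTP port


def object_url(bucket, key):
    """Build the URL for one object, escaping the bucket and key."""
    return f"{RIAK_URL}/buckets/{quote(bucket, safe='')}/keys/{quote(key, safe='')}"


def build_put(bucket, key, document):
    """Prepare a PUT that stores the document as JSON under bucket/key."""
    body = json.dumps(document).encode("utf-8")
    return Request(
        object_url(bucket, key),
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


request = build_put("articles", "story-1", {"headline": "Hello"})
# Passing the request to urllib.request.urlopen() would send it to a running node.
```

Fetching the object back would be a GET against the same URL, with the stored Content-Type returned in the response headers.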

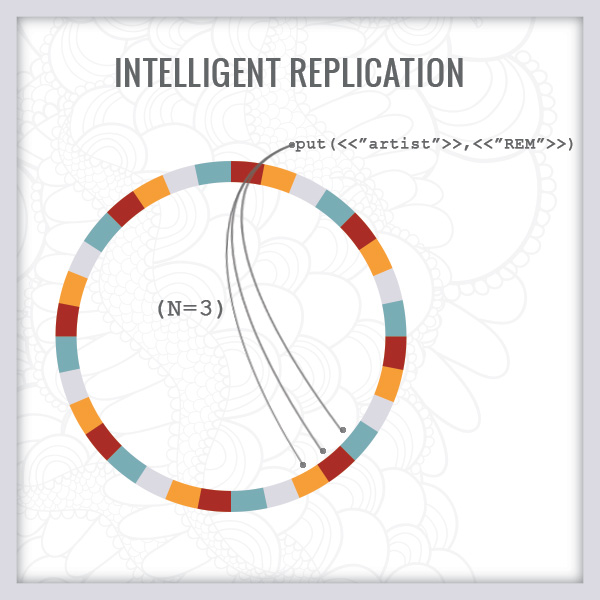

Riak distributes keys to nodes in the database cluster using a technique called “consistent hashing,” which avoids the need for wholesale data reshuffling when a node is added to or removed from the cluster. This technique is more or less inherent to Dynamo-style distributed storage. It is also reportedly used by BitTorrent, Last.fm, and Akamai, among others.
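The following is a minimal sketch of the textbook form of consistent hashing, assuming SHA-1 for ring positions and several points per node. Riak's real ring is organized differently (as I understand it, the hash space is divided into fixed partitions that nodes claim), so treat the HashRing class and its methods as hypothetical illustration only.

```python
import bisect
import hashlib


def ring_position(value):
    """Map a string onto the ring using SHA-1 (an integer below 2**160)."""
    return int(hashlib.sha1(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    def __init__(self, nodes, points_per_node=64):
        self.points_per_node = points_per_node
        self._ring = []  # sorted list of (position, node) pairs
        for node in nodes:
            self.add(node)

    def add(self, node):
        """Place several points for the node around the ring."""
        for i in range(self.points_per_node):
            bisect.insort(self._ring, (ring_position(f"{node}#{i}"), node))

    def remove(self, node):
        """Drop all of the node's points; its keys fall to the next points."""
        self._ring = [entry for entry in self._ring if entry[1] != node]

    def owner(self, key):
        """Walk clockwise from the key's position to the next node point."""
        index = bisect.bisect(self._ring, (ring_position(key), ""))
        return self._ring[index % len(self._ring)][1]


keys = [f"story-{n}" for n in range(1000)]
ring = HashRing(["node_a", "node_b", "node_c"])
before = {key: ring.owner(key) for key in keys}
ring.add("node_d")
after = {key: ring.owner(key) for key in keys}
moved = [key for key in keys if before[key] != after[key]]
```

After node_d joins, the only keys that change owner are the ones node_d takes over; everything else stays where it was, which is the property that avoids a full reshuffle.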

Riak offers some tunable parameters for consistency and availability. For example, on a read you can require that a certain number of nodes return matching values before the result is confirmed. These settings can even be varied per request if needed.
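A toy version of that read rule, as described above, might look like the following. It is a sketch under the assumption that the client already has one reply per replica; quorum_read and its r parameter are hypothetical names, not a Riak client call.

```python
from collections import Counter


def quorum_read(replica_values, r):
    """Return a value once at least r replicas agree on it, else None.

    replica_values: the value each replica returned (hypothetical input;
    a real client would collect these from the cluster).
    """
    if not replica_values:
        return None
    value, votes = Counter(replica_values).most_common(1)[0]
    return value if votes >= r else None


# Three replicas, one of which still holds a stale value.
replies = ["v2", "v2", "v1"]
relaxed = quorum_read(replies, r=2)   # two replicas agree, so the read succeeds
strict = quorum_read(replies, r=3)    # not all three agree, so it does not
```

Raising r trades availability for stronger agreement: the strict read fails here even though a majority of replicas already hold the new value.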

Riak’s default storage backend is “Bitcask.” This does not seem to be something that many users feel the need to change. One operational note related to Bitcask is that it can consume a lot of open file handles. For that reason Basho advises increasing the ulimit on machines running Riak.

Another storage backend is “LevelDB,” built on Google’s open-source LevelDB library, whose design borrows from BigTable’s storage layer. Its major selling point versus Bitcask seems to be that while Bitcask keeps all keys in memory at all times, LevelDB doesn’t need to. My guess based on our existing corpus of data is that this limitation of Bitcask is unlikely to be a problem.

Running Riak nodes can be accessed directly via the riak attach command, which drops you into an Erlang shell for that node.

Bob Ippolito of Mochi Media says: “When you choose an eventually consistent data store you’re prioritizing availability and partition tolerance over consistency, but this doesn’t mean your application has to be inconsistent. What it does mean is that you have to move your conflict resolution from writes to reads. Riak does almost all of the hard work for you…” The implication here is that our API implementation may include some code that ensures consistency at read time.
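To give a feel for what read-time conflict resolution could look like in our API code, here is a small sketch. The merge policy is an assumption made up for illustration (newest version wins for scalar fields, tags are unioned), as are the field names and the resolve_siblings function.

```python
def resolve_siblings(siblings):
    """Merge conflicting versions of a document into one.

    Assumed policy: scalar fields come from the most recently updated
    version; the 'tags' list is the union across all versions, so no tag
    added on either side of the conflict is lost.
    """
    if not siblings:
        raise ValueError("no siblings to resolve")
    newest = max(siblings, key=lambda doc: doc["updated_at"])
    merged = dict(newest)
    merged["tags"] = sorted(
        {tag for doc in siblings for tag in doc.get("tags", [])}
    )
    return merged


siblings = [
    {"headline": "Draft", "tags": ["local"], "updated_at": 100},
    {"headline": "Final", "tags": ["politics"], "updated_at": 120},
]
resolved = resolve_siblings(siblings)
```

The resolved document would then be written back so that later reads see a single value. The right policy depends on the data; a union works for tags but would be wrong for, say, a counter.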

Operation

Riak is controlled primarily by two command-line tools, riak and riak-admin.

The riak tool is used to start or stop Riak nodes.

The riak-admin tool controls running nodes. It is used to create node clusters from running nodes, and to inspect the state of running clusters. It also offers backup and restore commands.

If a node dies, a process called “hinted handoff” kicks in. This takes care of redistributing data – as needed, not en masse – to other nodes in the cluster. Later, if the dead node is replaced, hinted handoff also guides updates to that node’s data, catching it up with writes that happened while it was offline.
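The catch-up half of that behavior can be simulated in a few lines. This is a toy model, not how Riak implements it: the Node class, write, and hand_off are hypothetical, and a single fallback node stands in for the rest of the cluster.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.up = True
        self.data = {}
        self.hints = {}  # intended owner's name -> {key: value} held on its behalf


def write(key, value, owner, fallback):
    """Store on the owner if it is up; otherwise park the write on the fallback."""
    if owner.up:
        owner.data[key] = value
    else:
        fallback.hints.setdefault(owner.name, {})[key] = value


def hand_off(fallback, owner):
    """Once the owner is back, deliver the parked writes and forget them."""
    if owner.up:
        owner.data.update(fallback.hints.pop(owner.name, {}))


node_a, node_b = Node("node_a"), Node("node_b")
node_a.up = False
write("story-1", "v1", owner=node_a, fallback=node_b)  # accepted despite the outage
node_a.up = True
hand_off(node_b, node_a)
```

The write is accepted while node_a is down, and node_a ends up with it after recovery without any bulk copy of the data set.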

Individual Riak nodes can be backed up while running (via standard utilities like cp, tar, or rsync), thanks to the append-only nature of the Bitcask data store. There is also a whole-cluster backup utility, but if this is run while the cluster is live there is of course a risk that some writes that happen during the backup will be missed.

Riak upgrades can be deployed in a rolling fashion without taking down the cluster. Different versions of Riak will interoperate as you upgrade individual nodes.

Part of Basho’s business is “Riak Enterprise,” a hosted Riak solution. It includes multi-datacenter replication, 24x7 support, and various services for planning, installation, and deployment. Cost is $4,000 to $6,000 per node, depending on how many you buy.

Overall, low operations overhead seems to be a hallmark of Riak, both in day-to-day use and during scaling.

Suitability for use with our CMS

One of our goals is “store structured data, not presentation.” Riak fits well with this in that the stored values can be of any type – plain text, JSON, image data, BLOBs of any sort. Via the HTTP API, Content-Type headers can help API clients know what they’re getting.

If we decide we need to have Django talk to Riak directly, there is an existing “django-riak-engine” project we could take advantage of.

TastyPie, which powers our API, does not depend on the Django ORM. The TastyPie documentation even features an example using Riak as the data store.

The availability of client libraries for many popular languages could be advantageous, both for leveraging developer talent and for integrating with other parts of the stack.

Final thoughts

I am very impressed with Riak. It seems like an excellent choice for a data store for the CMS. It promises the performance needed for our consistently heavy traffic. It’s well established, so in using it we wouldn’t be dangerously out on the bleeding edge. It looks like it would be enjoyable to develop with, especially using the HTTP API. The low operations overhead is very appealing. And finally, it offers flexibility, scalability, and power that we will want and need for future projects.